Cloud Computing

Microsoft Azure

Upper Austria University of Applied Sciences

School of Informatics, Communications, and Media

© J. Heinzelreiter, W. Kurschl

www.fh-hagenberg.at

Version 2.4, 2015

A Brief History of Microsoft Azure

2006: Project started to explore a new services platform (“Red Dog”).

Analysis of existing Microsoft online services (Hotmail, …)

Similar problems across these services: time-consuming management of (virtual) machines, inefficient usage, no sharing of resources.

Implementation of core components: hypervisor, fabric controller, distributed storage system, developer tools.

October 2008: Launch of the Windows Azure CTP (Blobs, Queues, Tables, Roles); code runs in medium trust

March 2008: SQL Azure (DaaS)

November 2009: Full trust, native code support, support for PHP and Java

February 2010: General availability, .NET 4.0, VM Roles

June 2012: Preview of IaaS (Windows and Linux images), Web Sites, etc.

2013: General availability of IaaS, Web Sites, Mobile Services, HDInsight; preview of WebJobs

April 2014: Windows Azure renamed to Microsoft Azure

2014/15: Preview of Azure Machine Learning, Azure DocumentDB

CLC3: Azure

© J. Heinzelreiter, W. Kurschl

Slide 2

Data Centers

Traditional Data Centers – e.g.

Microsoft Dublin Data Center

27,900 m²

22.2 megawatts (final phase)

Container-based Data Centers – e.g.

Chicago Data Center

65,000 m²

60 megawatts (final phase)

Containers with up to 2500 servers

© www.datacenterknowledge.com

Data Center Locations

18 Azure data centers all over the world.

There are at least two data centers per geo-political region.

Hundreds of thousands of servers.

Content Delivery Network (CDN) nodes in 24 countries.

Virtualization – The Azure Hypervisor

Virtualization allows several virtual machines to run simultaneously on the same physical machine:

VMs share the resources of the underlying hardware.

Hardware abstraction: services run in a homogeneous environment.

The hypervisor (virtual machine manager) provides the infrastructure to run multiple operating systems concurrently on one machine.

Responsible for the fair allocation of resources (CPU, I/O, etc.)

Isolation of VMs.

The Azure hypervisor is optimized for the hardware in Microsoft’s data centers (it differs from Hyper-V).

The Azure kernel is aware of and optimized for the hypervisor (enlightened kernel).

Architecture of the Azure Hypervisor

Figure: architecture of the Azure Hypervisor. The hypervisor runs directly on the hardware of a physical machine. The host partition runs the host OS (kernel, standard drivers, virtualization service provider) and the virtual machine management; guest partitions 1 … n each run an application on a guest OS (kernel, virtualization service client). Partitions communicate via the VMBus.

Microsoft Azure Architecture (1)

Figure: layers of the architecture, from top to bottom: Cloud Application; Microsoft Azure with Compute, Storage, and Network, plus SQL Azure and Service Bus; Fabric Controller; Microsoft Azure Fabric (Data Center).

Microsoft Azure Architecture (2)

The Fabric is a network of interconnected nodes:

Commodity servers

High-speed switches, routers, load balancers

Fiber-optic connections

From a distance, the network looks like a weave, or a fabric.

The Azure Fabric Controller is the service that monitors, maintains, and provisions machines.

Core Services of Azure

Compute: Hosting of scalable services on Windows Server.

Storage: Management of highly available data (not relational).

Network: Resources for communicating within cloud services and with external applications (Service Bus, virtual networks for linking Azure and on-premises IT infrastructure).

The Fabric Controller (1)

The kernel of the cloud operating system:

Manages data center hardware

Manages Azure services

Equivalent of the OS for a single machine

Figure: analogy between a single machine and a data center: processes (e.g. Word, SQL Server) correspond to services (e.g. My Service, SQL Azure), the Windows kernel corresponds to the Fabric Controller, and a PC/server corresponds to the fabric (data center).

Responsibilities:

Resource management and provisioning

Service lifecycle management

Service health management

Inputs:

Description of the hardware and network resources it controls

Service model and binaries for cloud applications

The Fabric Controller (2)

Data centers are divided into clusters

Approximately 1000 rack-mounted servers (“nodes”)

Provides a unit of fault isolation

Inside a cluster

Each cluster is managed by a Fabric Controller.

The FC is a distributed application running redundantly on 5 nodes spread across fault domains.

One FC instance is the primary; all others are kept in sync with it.

The FC supports rolling upgrades, and services continue to run even if the FC fails entirely.

Service Deployment – The Service Model

The service model defines the requirements of a service

Roles of the service and role options (VM size, …)

Input endpoints

Local disk storage

Configuration parameters.

Based on the service model, the FC can automate the deployment of the service.

When the service model changes (e.g. the number of role instances), the FC tries to upgrade the service.

When a role instance is unhealthy, the FC moves the instance to a new VM.

Service Deployment

Packaging of service binaries and service configuration into a .cspkg file (e.g. Service.cspkg)

Upload of the package via the portal or the management API to the Red Dog Front End Service (RDFE)

RDFE picks one of the clusters (1 … n) based on the preferred data center region and cluster load.

Deployment steps, performed by the FC of the chosen cluster:

Find resources that satisfy constraints:

Hardware requirements

Fault domains

Optimize network proximity

Create role images and start VMs.

Copy roles’ files and launch role instances.

Configure networking

Assign Dynamic and Virtual IP addresses (DIPs, VIPs)

Program the load balancer

Service Deployment – Provisioning of Nodes

FC powers on the empty node.

FC downloads the maintenance OS (a small OS) and boots it.

The maintenance OS sets up the host partition.

The maintenance OS contains the FC Host Agent (for communication with the FC).

The maintenance OS downloads the Azure OS VHD (a stripped-down version of Windows Server).

The maintenance OS restarts the machine and boots the Azure OS.

The node is now ready to host guest VMs.

Figure: the Fabric Controller provides the Maintenance OS VHD and the Azure OS VHD; the node's host partition runs the Azure OS with the FC Host Agent on top of the Azure Hypervisor.

Inside a Node

Figure: a node (physical server) hosts guest partitions 1 … n, each running a role instance and an FC Guest Agent, and a host partition running the FC Host Agent. The agents communicate with the primary FC, which is replicated and uses an image repository (OS VHDs, role zip files).

The FC can send commands to the guest VMs via the agents.

The agents send health information (heartbeats) to the FC.

Inside a PaaS VM

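Figure: the guest partition runs the FC Guest Agent and the role instance and uses three disks: the resource disk (C:\, a VHD), the system disk (D:\, a differencing VHD on top of the Windows VHD), and the role disk (E:\ or F:\, a differencing VHD on top of the role VHD).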

The resource disk can be used to store data temporarily.

The system disk contains the OS, a variant of Windows Server Datacenter x64.

The role disk contains the role assemblies and resources.

Changes to system and role disks are stored in differencing VHDs, which contain deltas to the base VHDs.

A disk can be reset by simply deleting its differencing VHD.
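The reset mechanism relies on copy-on-write: writes go to the delta, reads fall back to the base image. The following C# sketch models this idea with in-memory block maps only; the class `DifferencingDisk` and the block-map representation are hypothetical and unrelated to the real VHD file format.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of a differencing disk: a read-only base image plus a delta
// holding every block written since the base image was attached (copy-on-write).
public sealed class DifferencingDisk
{
    private readonly IReadOnlyDictionary<long, byte[]> baseBlocks;                    // e.g. Windows VHD or role VHD
    private readonly Dictionary<long, byte[]> delta = new Dictionary<long, byte[]>(); // the differencing VHD
    private readonly int blockSize;

    public DifferencingDisk(IReadOnlyDictionary<long, byte[]> baseBlocks, int blockSize)
    {
        this.baseBlocks = baseBlocks ?? throw new ArgumentNullException(nameof(baseBlocks));
        if (blockSize <= 0) throw new ArgumentOutOfRangeException(nameof(blockSize));
        this.blockSize = blockSize;
    }

    public int ChangedBlockCount => delta.Count;

    // Reads prefer the delta, fall back to the base image, and return zeros otherwise.
    public byte[] Read(long block)
    {
        if (delta.TryGetValue(block, out byte[] changed)) return (byte[])changed.Clone();
        if (baseBlocks.TryGetValue(block, out byte[] original)) return (byte[])original.Clone();
        return new byte[blockSize];
    }

    // Writes never touch the base image; they only go to the delta.
    public void Write(long block, byte[] data)
    {
        if (data == null || data.Length != blockSize)
            throw new ArgumentException($"Expected a block of {blockSize} bytes.", nameof(data));
        delta[block] = (byte[])data.Clone();
    }

    // Equivalent of deleting the differencing VHD: all changes are discarded.
    public void Reset() => delta.Clear();
}
```

In this model the base map is never written, so it could be shared by several disks, each with its own delta.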

Fault Tolerance and Availability

Update Domains

Azure updates the Azure OS (host OS) and the guest OS (only for PaaS services) automatically.

Updates also take place when new service revisions are deployed.

During an update, the affected role instances are not available.

Service roles are partitioned into update domains (default: 5, maximum: 20).

No two update domains are updated simultaneously.

Fault Domains

A fault domain is a physical unit of failure, typically a rack.

Service roles are split up into different fault domains.

Currently, the FC makes sure that role instances are spread across at least 2 fault domains.

Availability SLA:

99.95 % when two or more role instances are used.
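As a worked example of what 99.95 % means: the permitted unavailability is 0.05 % of the measurement period, which for a 30-day month is 43,200 min × 0.0005 = 21.6 min. The small C# helper below reproduces this arithmetic; the 30-day month is an assumption, and the exact measurement rules are defined in the SLA document itself. `SlaMath` is a hypothetical name.

```csharp
using System;

public static class SlaMath
{
    // Longest total downtime within `period` that still meets `availabilityPercent` (e.g. 99.95).
    public static TimeSpan AllowedDowntime(double availabilityPercent, TimeSpan period)
    {
        if (availabilityPercent < 0 || availabilityPercent > 100)
            throw new ArgumentOutOfRangeException(nameof(availabilityPercent));
        double unavailableFraction = (100.0 - availabilityPercent) / 100.0;
        // Round to whole ticks to avoid floating-point noise such as 21.59999 minutes.
        return TimeSpan.FromTicks((long)Math.Round(period.Ticks * unavailableFraction));
    }
}
```

For example, `SlaMath.AllowedDowntime(99.95, TimeSpan.FromDays(30))` yields about 21.6 minutes.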

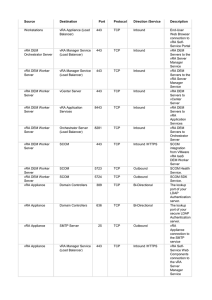

Fault Domains [Kommalapati2010]

Figure: the Fabric Controller manages the Azure Fabric, which is divided into fault domains 1 … m. Each fault domain has its own switch and power supply and contains servers (Server11 … Servermn) that host instances of several applications; the instances of one application (e.g. App 1/Instance 1 and App 1/Instance 2) are placed in different fault domains.

Service Deployment – Summary

Cloud Service with 3 Update Domains

Role A: 3 Instances

Role B: 2 Instances

Virtual IP address (VIP): myservice.cloudapp.net

Dynamic IP address (DIP)

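Figure: the load balancer forwards requests for the VIP to the DIPs of the role instances; the instances are spread over fault domains 1–3 on the Azure Fabric and labeled with their update domain (1–3).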
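The effect of spreading instances can be checked with a small model of this deployment (Role A with 3 instances, Role B with 2, 3 update domains, 3 fault domains). This sketch assumes simple round-robin assignment (instance i goes to domain i mod count) for both update and fault domains; the actual placement is decided by the FC, and `Placement`/`DomainPlanner` are hypothetical names. Domains are numbered from 0 here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical record of where one role instance is placed.
public sealed class Placement
{
    public Placement(string role, int instance, int updateDomain, int faultDomain)
    {
        Role = role; Instance = instance; UpdateDomain = updateDomain; FaultDomain = faultDomain;
    }
    public string Role { get; }
    public int Instance { get; }
    public int UpdateDomain { get; }
    public int FaultDomain { get; }
    public override string ToString() => $"{Role}#{Instance}: UD {UpdateDomain}, FD {FaultDomain}";
}

public static class DomainPlanner
{
    // Assumption: round-robin, i.e. instance i goes to update domain i % updateDomains
    // and to fault domain i % faultDomains (the real FC decides placement itself).
    public static List<Placement> Place(string role, int instanceCount, int updateDomains, int faultDomains)
    {
        if (instanceCount < 0) throw new ArgumentOutOfRangeException(nameof(instanceCount));
        if (updateDomains < 1) throw new ArgumentOutOfRangeException(nameof(updateDomains));
        if (faultDomains < 1) throw new ArgumentOutOfRangeException(nameof(faultDomains));
        return Enumerable.Range(0, instanceCount)
            .Select(i => new Placement(role, i, i % updateDomains, i % faultDomains))
            .ToList();
    }

    // Instances still serving requests while update domain `ud` is being upgraded.
    public static int RunningDuringUpgrade(IEnumerable<Placement> placements, int ud) =>
        placements.Count(p => p.UpdateDomain != ud);

    // Instances that survive the failure of fault domain `fd` (e.g. a rack losing power).
    public static int RunningAfterFault(IEnumerable<Placement> placements, int fd) =>
        placements.Count(p => p.FaultDomain != fd);
}
```

With this assignment, upgrading any single update domain leaves 2 of Role A's 3 instances running, and a failure of fault domain 0 still leaves one instance of Role B.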

The Role of the Fabric Controller – Summary

FC provisions and manages resources.

Application owners specify required resources in resource descriptors (service models).

The FC automatically provisions the requested resources.

FC guarantees fault tolerance and availability of resources.

Instances are allocated across fault and update domains.

Fault Domains: Units of failure in the data center (e.g. a rack of machines).

FC detects application failures early.

FC spawns additional instances if needed.

Update Domains: Only one update domain is updated at a time during upgrades (OS, services).

Compute Resources – The Service Model

A Cloud Service consists of a set of services.

Each service corresponds to a service type, a so-called role.

Each role can have an arbitrary number of role instances.

Each role instance runs in one VM.

Web roles are interactive .NET applications hosted in IIS:

Web application or Web service (WCF)

Worker roles are durable background processes:

Execute long-running transactions

Often isolated from the outside world

Can, however, be made reachable for external applications

The service model is described by two files:

The Service Definition File: topology of the service

The Service Configuration File: configuration parameters

Compute Resources: Web and Worker Roles

Figure: requests to the VIP pass through the load balancer to the DIP of a web role instance hosted in IIS on physical machine 1; a worker role instance on physical machine 2 communicates with the web role via a queue. Each guest partition contains an FC Guest Agent and each host partition an FC Host Agent, all managed by the Fabric Controller.

Service Model: Service Definition

The ServiceDefinition.csdef file defines the overall structure of a service.

For each role, one can define the following parameters:

vmsize: CPU cores (1 – 16) and memory of the VM (1.75 – 112 GB)

full/partial trust: whether native code execution is supported

Endpoints: internal and external endpoints (http, https, tcp)

LocalStorage: temporary storage on server running the instance

ConfigurationSettings: names of configuration parameters

upgradeDomainCount: number of update domains

The Service Definition is processed at deployment time.

<ServiceDefinition name="MyService" upgradeDomainCount="10" …>
  <WebRole name="MyWebRole" enableNativeCodeExecution="true" vmsize="Medium">
    <Endpoints>
      <InputEndpoint name="HttpIn" protocol="http" port="80" />
    </Endpoints>
    <ConfigurationSettings>
      <Setting name="name1" />
      …
    </ConfigurationSettings>
  </WebRole>
</ServiceDefinition>
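Because the service definition is plain XML, tools can inspect it before deployment. A minimal C# sketch, assuming the file follows the structure shown above (the `…` placeholders must be filled in or removed before it parses as XML); it matches elements by local name so it works with or without the schema namespace. `EndpointInfo` and `CsdefReader` are hypothetical names, not part of the Azure SDK.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical summary of one endpoint declared in a service definition.
public sealed class EndpointInfo
{
    public EndpointInfo(string role, string kind, string name, string protocol, int? port)
    {
        Role = role; Kind = kind; Name = name; Protocol = protocol; Port = port;
    }
    public string Role { get; }
    public string Kind { get; }      // "InputEndpoint" or "InternalEndpoint"
    public string Name { get; }
    public string Protocol { get; }
    public int? Port { get; }        // null when no fixed port is declared
}

public static class CsdefReader
{
    private static readonly string[] RoleKinds = { "WebRole", "WorkerRole" };
    private static readonly string[] EndpointKinds = { "InputEndpoint", "InternalEndpoint" };

    // Matches elements by local name, so the schema namespace may or may not be present.
    public static List<EndpointInfo> ReadEndpoints(string csdefXml)
    {
        XDocument doc = XDocument.Parse(csdefXml);
        return doc.Descendants()
            .Where(role => RoleKinds.Contains(role.Name.LocalName))
            .SelectMany(role => role.Descendants()
                .Where(ep => EndpointKinds.Contains(ep.Name.LocalName))
                .Select(ep => new EndpointInfo(
                    (string)role.Attribute("name"),
                    ep.Name.LocalName,
                    (string)ep.Attribute("name"),
                    (string)ep.Attribute("protocol"),
                    (int?)ep.Attribute("port"))))
            .ToList();
    }
}
```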

Role Size (vmsize)

The role size (vmsize) parameter specifies how many slices of an 8- or 16-core machine you get.

Each size has a different billing rate.

| VM size | Cores | Memory | Local storage | Characteristics |
|---|---|---|---|---|
| A0 (ExtraSmall) | Shared | 768 MB | 20 GB | Testing, services with a low memory footprint |
| A1 – A4 | 1 – 8 | 1.75 – 14 GB | 200 – 2000 GB | A1=Small, A2=Medium, A3=Large, A4=ExtraLarge |
| A5 – A7 | 2 – 8 | 14 – 56 GB | 500 – 2000 GB | |
| A8 – A11 | 8 – 16 | 56 – 112 GB | 382 GB | Compute-intensive applications (HPC); A8 and A9 support RDMA *) |
| D1 – D4 | 1 – 8 | 3.5 – 28 GB | 50 – 400 GB | 60 percent faster than A-series, SSDs |
| D11 – D14 | 2 – 16 | 14 – 112 GB | 100 – 800 GB | 60 percent faster than A-series, SSDs |
| D1_v2 – D5_v2 | 1 – 16 | 3.5 – 56 GB | 50 – 800 GB | 35 percent faster than D-series, SSDs |
| D11_v2 – D14_v2 | 2 – 16 | 14 – 112 GB | 100 – 800 GB | 35 percent faster than D-series, SSDs |

*) RDMA: Remote Direct Memory Access technology: low-latency, high-throughput network.

Service Model: Service Configuration

The ServiceConfiguration.cscfg file

specifies the number of instances for each role, and

defines the values for the configuration settings.

The Service Configuration can be updated at service runtime.

The FC tries to provision the updated number of role instances.

The runtime fires change events; the role can subscribe to these events.

<ServiceConfiguration serviceName="MyService" xmlns="…">
  <Role name="MyWebRole">
    <Instances count="3" />
    <ConfigurationSettings>
      <Setting name="name1" value="value1" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>
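A deployment script might want to check the instance counts and setting values in a .cscfg file before uploading it. The C# sketch below reads them with LINQ to XML; it assumes the structure shown above, matches elements by local name (so the actual `xmlns` value does not matter), and treats a role without an `<Instances>` element as having 0 instances, which is an assumption of this sketch. `RoleConfig` and `CscfgReader` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical view of one <Role> element of a service configuration.
public sealed class RoleConfig
{
    public RoleConfig(string name, int instanceCount, IReadOnlyDictionary<string, string> settings)
    {
        Name = name; InstanceCount = instanceCount; Settings = settings;
    }
    public string Name { get; }
    public int InstanceCount { get; }
    public IReadOnlyDictionary<string, string> Settings { get; }
}

public static class CscfgReader
{
    // Matches elements by local name, so the xmlns of the file does not matter.
    public static Dictionary<string, RoleConfig> Read(string cscfgXml)
    {
        XDocument doc = XDocument.Parse(cscfgXml);
        return doc.Descendants()
            .Where(e => e.Name.LocalName == "Role")
            .Select(role => new RoleConfig(
                (string)role.Attribute("name"),
                // <Instances count="n" />; a missing element is treated as 0 (assumption)
                role.Elements()
                    .Where(e => e.Name.LocalName == "Instances")
                    .Select(e => (int)e.Attribute("count"))
                    .FirstOrDefault(),
                role.Descendants()
                    .Where(e => e.Name.LocalName == "Setting")
                    .ToDictionary(s => (string)s.Attribute("name"), s => (string)s.Attribute("value"))))
            .ToDictionary(r => r.Name);
    }
}
```

Editing a local copy changes nothing in the cloud; only an updated configuration applied to the deployment causes the FC to provision a different number of instances.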

The Azure Management Portal

The Management Portal provides access to service deployment and management tasks:

Manage cloud services

Manage storage accounts

Manage SQL Azure databases

Manage virtual networks

Manage virtual machines

Manage Web Sites

The portal is purely HTML-based.

There is a preview of a new portal.

Management Portal: Create a Cloud Service

Microsoft Azure APIs

Azure offers several APIs for managing resources “inside” and “outside” the cloud.

The Service Runtime API enables you to interact with the Azure environment from within roles.

The Storage API provides classes for working with Azure storage services (Blobs, Tables, and Queues).

A set of classes allows you to manage the credentials of storage accounts.

The Service Management API is a REST API for managing your services from “outside” the cloud.

The Diagnostics API enables you to collect logs and diagnostic information within roles.

There is also a REST API for managing diagnostics remotely.

Azure Service Runtime API

Figure: class diagram of the Service Runtime API. RoleEnvironment provides CurrentRoleInstance, Roles, GetConfigurationSettingValue(), RequestRecycle(), and the events Changing, Changed, and Stopping. Roles contains Role objects (Name, Instances); each Role has any number of RoleInstance objects (Id, Role, FaultDomain, UpdateDomain, InstanceEndpoints). Role implementations such as MyWebRole and MyWorkerRole derive from RoleEntryPoint (OnStart(), Run(), OnStop()).

Implementation of Roles

Derive a class from RoleEntryPoint.

This is optional for Web Roles.

Override the life cycle methods:

OnStart/OnStop: Called when role instance is initialized/stopped.

Run: Intended to run for the life of the role instance in an endless loop.

OnStart can be used to register for changes in role configuration.

using System.Linq;
using Microsoft.WindowsAzure.ServiceRuntime;

public class WebRole : RoleEntryPoint {
    public override bool OnStart() {
        // React to configuration settings that are changed at runtime
        RoleEnvironment.Changed += (sender, e) => {
            var settingChanges = e.Changes
                .OfType<RoleEnvironmentConfigurationSettingChange>();
            foreach (var change in settingChanges) {
                string settingName = change.ConfigurationSettingName;
                string newValue = RoleEnvironment.GetConfigurationSettingValue(settingName);
                // process changes in settingName
            }
        };
        return base.OnStart();
    }
}

Implementation of Web and Worker Roles

Web Role

No difference from a standard ASP.NET (Web Forms/MVC) or WCF application.

Run() can optionally be overridden.

Worker Role

Override the Run() method of RoleEntryPoint. This method serves as the main thread of execution for the role.

using System.Threading;
using Microsoft.WindowsAzure.ServiceRuntime;

class WorkerRole : RoleEntryPoint {
    public override void Run() {
        while (true) {
            // get next message
            // process message
            // delete message
            Thread.Sleep(1000); // wait before polling again
        }
    }
}


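Alternatively, an event-driven variant using a Service Bus queue client:

using System.Threading;
using Microsoft.ServiceBus.Messaging;
using Microsoft.WindowsAzure.ServiceRuntime;

class WorkerRole : RoleEntryPoint {
    private QueueClient serviceBusQueue; // created in OnStart()
    private readonly ManualResetEvent stopped = new ManualResetEvent(false);

    public override void Run() {
        serviceBusQueue.OnMessage(message => {
            // process message
        });
        stopped.WaitOne(); // Run() must not return, otherwise the instance is recycled
    }

    public override void OnStop() { serviceBusQueue.Close(); stopped.Set(); base.OnStop(); }
}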
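The get/process/delete pattern of the polling loop can be tried out locally without Azure. The C# sketch below replaces the Azure queue with a hypothetical in-memory `SimpleMessageQueue` (not an Azure API) that hides a message while it is being processed. The message is deleted only after processing succeeded; if processing throws, the sketch puts it back explicitly, whereas a real Azure queue makes it visible again after a timeout. Either way, a failing message is retried (at-least-once processing). `WorkerLoop` is likewise a hypothetical name.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical stand-in for a queue with get/delete semantics (not an Azure API).
public sealed class SimpleMessageQueue
{
    private readonly ConcurrentQueue<string> visible = new ConcurrentQueue<string>();
    private readonly ConcurrentDictionary<Guid, string> inFlight = new ConcurrentDictionary<Guid, string>();

    public void Add(string body) => visible.Enqueue(body);

    // Takes the next message and hides it until it is deleted or abandoned.
    public bool TryGet(out Guid receipt, out string body)
    {
        receipt = Guid.Empty;
        if (!visible.TryDequeue(out body)) return false;
        receipt = Guid.NewGuid();
        inFlight[receipt] = body;
        return true;
    }

    public void Delete(Guid receipt) => inFlight.TryRemove(receipt, out _);

    // Makes a message that was not deleted visible again (here: immediately).
    public void Abandon(Guid receipt)
    {
        if (inFlight.TryRemove(receipt, out string body)) visible.Enqueue(body);
    }

    public int InFlightCount => inFlight.Count;
}

public static class WorkerLoop
{
    // Same structure as Run() above: get next message, process it, delete it.
    public static void Run(SimpleMessageQueue queue, Action<string> process,
                           CancellationToken token, TimeSpan idleDelay)
    {
        while (!token.IsCancellationRequested)
        {
            if (queue.TryGet(out Guid receipt, out string body))
            {
                try
                {
                    process(body);
                    queue.Delete(receipt);  // delete only after successful processing
                }
                catch (Exception)
                {
                    queue.Abandon(receipt); // retry later
                }
            }
            else
            {
                token.WaitHandle.WaitOne(idleDelay); // queue empty: back off instead of spinning
            }
        }
    }
}
```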

Role Endpoints (1)

You can make your service listen on any HTTP(S) or TCP port, for clients either inside or outside the cloud.

You have to declare internal and external endpoints in the service definition.

<ServiceDefinition name="MyService" ...>
  <WorkerRole name="MyRole" enableNativeCodeExecution="true">
    <Endpoints>
      <InputEndpoint name="MyExternalEP" port="5000" protocol="tcp" />
      <InternalEndpoint name="MyInternalEP" protocol="tcp"/>
    </Endpoints>
  </WorkerRole>
</ServiceDefinition>

Figure: an external app connects through the load balancer to MyExternalEP (external TCP port 5000) of MyRole in MyService; another role connects directly to MyInternalEP.

Port 5000 is mapped to a random internal port.

Role Endpoints (2)

You must use the Service Runtime API to determine the right port:

RoleInstanceEndpoint externalEP =
    RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["MyExternalEP"];
// public IP address and port of the endpoint
System.Net.IPEndPoint publicIpAndPort = externalEP.PublicIPEndpoint;
// IP address and port on which this role instance listens
System.Net.IPEndPoint internalIpAndPort = externalEP.IPEndpoint;

When accessing internal endpoints, you have to determine which role instance you want to connect to:

foreach (RoleInstance instance in RoleEnvironment.Roles["MyRole"].Instances) {
    if (WantToConnectTo(instance)) { // WantToConnectTo: your own selection logic
        RoleInstanceEndpoint internalEP = instance.InstanceEndpoints["MyInternalEP"];
        System.Net.IPEndPoint internalIpAndPort = internalEP.IPEndpoint;
        // access instance via internalIpAndPort
    }
}

Inter-role Communication

Role instances can communicate directly using TCP or HTTP(S) connections.

Figure: an external app reaches the role instances through the load balancer and their external endpoint; role instances 1–4 call each other directly via internal endpoints.

Role instances can also communicate asynchronously via queues.

Preferred method for reliable messaging

See section Azure Queues for details

Figure: role instances 1–4 exchange messages via a shared queue.


Inter-role Communication using WCF/TCP (1)

Implementation of the service


using System.ServiceModel;

[ServiceContract(Namespace="…")]
public interface ICalcService {
    [OperationContract]
    double Add(double a, double b);
}

public class CalcService : ICalcService {
    public double Add(double a, double b) {
        return a + b;
    }
}

Configuration of the worker role

Add external or internal endpoints

<ServiceDefinition name="CalcService" ...>
  <WorkerRole name="CalcRole">
    <Endpoints>
      <InputEndpoint name="CalcServiceEP" port="5000" protocol="tcp" />
      <!-- option 2: <InternalEndpoint name="MyInternalEP" protocol="tcp"/> -->
    </Endpoints>
  </WorkerRole>
</ServiceDefinition>

Inter-role Communication using WCF/TCP (2)

Hosting of the WCF service:

Figure: an external app calls net.tcp://myCloudService.cloudapp.net:5000/CalcService; the load balancer forwards the call to the CalcService hosted in a role instance, which listens on CurrentRoleInstance.InstanceEndpoints["CalcServiceEP"].IPEndpoint.

using System;
using System.ServiceModel;
using Microsoft.WindowsAzure.ServiceRuntime;

public class WorkerRole : RoleEntryPoint {
    private ServiceHost serviceHost;

    public override bool OnStart() {
        this.serviceHost = new ServiceHost(typeof(CalcService));
        NetTcpBinding binding = new NetTcpBinding(SecurityMode.None);
        RoleInstanceEndpoint externalEndPoint =
            RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["CalcServiceEP"];
        this.serviceHost.AddServiceEndpoint(
            typeof(ICalcService), binding,
            String.Format("net.tcp://{0}/CalcService",
                externalEndPoint.IPEndpoint)); // IP address and port of this instance
        this.serviceHost.Open(); // start listening
        return base.OnStart();
    }

    public override void OnStop() {
        this.serviceHost.Close();
        base.OnStop();
    }
}

Inter-role Communication using WCF/TCP (3)

Client using the external endpoint:

NetTcpBinding binding = new NetTcpBinding(SecurityMode.None, false);
using (ChannelFactory<ICalcService> cf =
           new ChannelFactory<ICalcService>(binding,
               "net.tcp://myCloudService.cloudapp.net:5000/CalcService")) {
    ICalcService calcProxy = cf.CreateChannel();
    double sum = calcProxy.Add(1, 2);
}

Client using the internal endpoint:

No load balancer is involved, so service calls have less latency.

The client must do the load balancing itself.

Use RoleEnvironment.Roles["TargetRole"] to discover the endpoints:

foreach (RoleInstance ri in RoleEnvironment.Roles["CalcRole"].Instances) {
    RoleInstanceEndpoint ep = ri.InstanceEndpoints["MyInternalEP"];
    string address = String.Format("net.tcp://{0}/CalcService", ep.IPEndpoint);
    var cf = new ChannelFactory<ICalcService>(binding, address);
    …
}
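Since no load balancer is involved for internal endpoints, a simple client-side strategy is round-robin over the discovered endpoints. A minimal C# sketch; `RoundRobinEndpointSelector` is a hypothetical helper, and in a role the list of `IPEndPoint`s would be built from `ri.InstanceEndpoints["MyInternalEP"].IPEndpoint` for each instance, as in the loop above.

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Threading;

// Hypothetical client-side load balancer: hands out endpoints in round-robin order.
public sealed class RoundRobinEndpointSelector
{
    private readonly IReadOnlyList<IPEndPoint> endpoints;
    private int counter = -1;

    public RoundRobinEndpointSelector(IReadOnlyList<IPEndPoint> endpoints)
    {
        if (endpoints == null || endpoints.Count == 0)
            throw new ArgumentException("At least one endpoint is required.", nameof(endpoints));
        this.endpoints = new List<IPEndPoint>(endpoints); // snapshot of the current topology
    }

    public IPEndPoint Next()
    {
        // Interlocked makes the selector safe to share between threads;
        // the unsigned cast keeps the index non-negative after the counter wraps.
        uint n = unchecked((uint)Interlocked.Increment(ref counter));
        return endpoints[(int)(n % (uint)endpoints.Count)];
    }
}
```

The instance list should be rebuilt when the topology changes (for example in a RoleEnvironment.Changed handler), because instances are added or removed when the instance count is updated.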

Simulation of a Worker Role in a Web Role

Roles provide a physical separation of components.

Each role instance causes additional costs.

If the web role has sufficient compute resources, it can take over the task of a worker role.

The worker code should be logically separated from the web role.

Approach:

Start worker thread(s) in an appropriate life cycle method (OnStart or Application_Start).

Use a standard method for passing messages (e.g. queues).

Process messages in worker thread(s).
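The approach above can be sketched with an in-process queue. This is an illustration under assumptions: `InProcessWorker` is a hypothetical helper, and it uses a `BlockingCollection<T>` from the .NET base class library instead of an Azure queue. As a result, pending messages are lost if the instance is recycled; for reliable processing, a durable queue as suggested above is still the better choice.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical helper: runs "worker role" code on a background task inside the web role process.
public sealed class InProcessWorker
{
    private readonly BlockingCollection<string> messages = new BlockingCollection<string>();
    private readonly Action<string> handler;
    private Task worker;

    public InProcessWorker(Action<string> handler)
    {
        this.handler = handler ?? throw new ArgumentNullException(nameof(handler));
    }

    // Call from OnStart() or Application_Start.
    public void Start()
    {
        if (worker != null) throw new InvalidOperationException("Worker already started.");
        worker = Task.Factory.StartNew(() =>
        {
            // Blocks while the collection is empty; ends after CompleteAdding() once drained.
            foreach (string message in messages.GetConsumingEnumerable())
                handler(message);
        }, TaskCreationOptions.LongRunning);
    }

    // Called from request-handling code to hand work to the background task.
    public void Post(string message) => messages.Add(message);

    // Call from OnStop() or Application_End: finish queued messages, then stop.
    public void Stop()
    {
        messages.CompleteAdding();
        worker?.Wait();
    }
}
```

If the handler throws, the background task faults and `Stop()` rethrows the error wrapped in an `AggregateException`.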

The Service Management API

The Service Management API provides programmatic access to most of the functionality of the developer portal:

List/create/update/delete hosted services

Get/upgrade/delete/swap deployment

Reboot/reimage role instance

List/create/delete/update storage accounts

Get storage account keys

Get/list/add/delete management certificates

Operations on virtual machines and virtual networks

The Management API can be accessed from inside and outside Azure.

The Management API is REST-based.

All operations are performed over SSL.

Using the Service Management API

Create an X.509 certificate (by using makecert or IIS Manager).

Upload the certificate (.cer file containing the public key) through the portal.

It is used to validate signed requests.

Implement the client using the Azure Management Libraries:

// The client needs the certificate including its private key (e.g. a .pfx file);
// only the public part (.cer) is uploaded to the portal. "pfxPassword" is a placeholder.
X509Certificate2 certificate = new X509Certificate2("mycert.pfx", "pfxPassword");
SubscriptionCloudCredentials creds =
    new CertificateCloudCredentials(subscriptionId, certificate);
StorageManagementClient storageClient =
    CloudContext.Clients.CreateStorageManagementClient(creds);
StorageAccountListResponse response =
    storageClient.StorageAccounts.List();
foreach (StorageAccount storageAccount in response)
    Console.WriteLine(storageAccount.Uri);

Local Storage

Local Storage Resources are directories in the VM’s local file system:

Use it to store temporary data.

Fast storage operations, because there is no network latency.

Only the role instance hosted by the VM has access to it.

Declare local storage in service definition:

<ServiceDefinition name="MyService" ...>
  <WorkerRole name="MyRole">
    <LocalResources>
      <LocalStorage name="myLocalStorage" sizeInMB="10"
                    cleanOnRoleRecycle="true" />
    </LocalResources>
  </WorkerRole>
</ServiceDefinition>

Determine actual storage location with Runtime API:

LocalResource myRes = RoleEnvironment.GetLocalResource("myLocalStorage");
// Path.Combine works whether or not RootPath ends with a backslash
using (FileStream fs = File.Create(Path.Combine(myRes.RootPath, "tempFile"))) { … }
