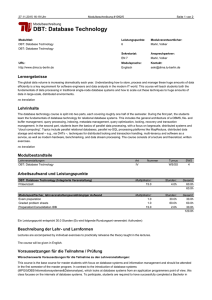

T5.1: Grid Data Management Architecture and
